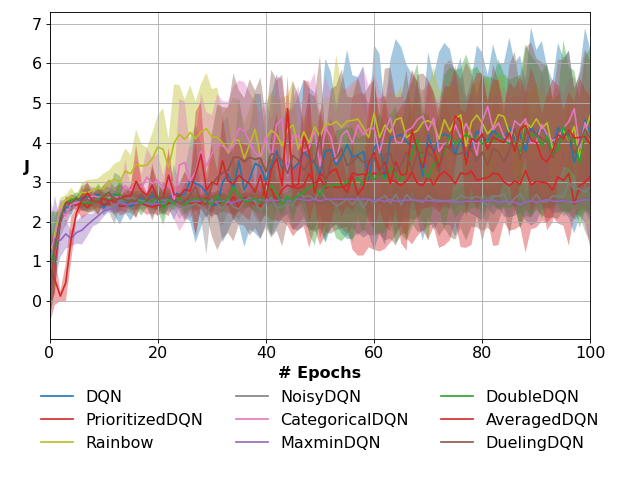

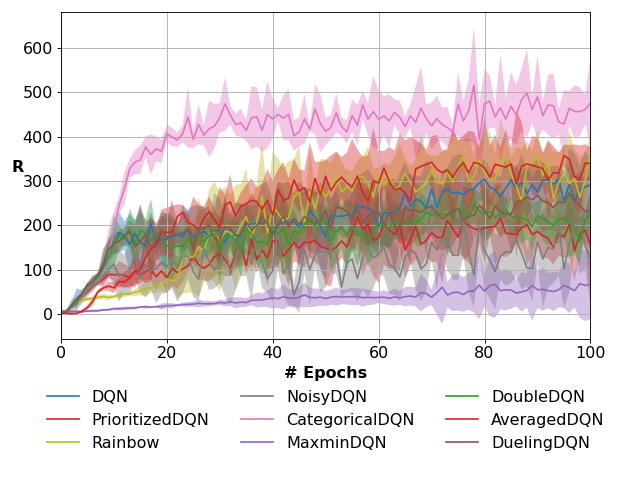

Atari Environment Benchmark

| Run parameter | Value |
|---|---|
| n_runs | 5 |
| n_epochs | 200 |
| n_steps | 250000 |
| n_episodes_test | 125000 |
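
Taken together, these parameters fix the training budget of each run. The sketch below works out that arithmetic. It assumes that `n_steps` counts environment steps per epoch and that the 4-frame action repeat built into `BreakoutDeterministic-v4` is the only frame skipping applied. `training_budget` and `FRAME_SKIP` are illustrative names and are not part of the benchmark code.

```python
# Hypothetical helper: derives the training budget implied by the run parameters.
# Assumption: n_steps is the number of environment (agent) steps per epoch.
RUN_PARAMS = {"n_runs": 5, "n_epochs": 200, "n_steps": 250_000}

# Assumption: the built-in action repeat of BreakoutDeterministic-v4 (4 frames)
# is the only frame skipping; no extra wrapper skips frames.
FRAME_SKIP = 4


def training_budget(params, frame_skip=FRAME_SKIP):
    steps_per_run = params["n_epochs"] * params["n_steps"]
    return {
        "steps_per_run": steps_per_run,
        "frames_per_run": steps_per_run * frame_skip,
        "steps_all_runs": steps_per_run * params["n_runs"],
    }


print(training_budget(RUN_PARAMS))
```

Under these assumptions, each run trains for 50 million agent steps (200 million emulator frames), which comes to 250 million steps across the five runs.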

BreakoutDeterministic-v4

AveragedDQN:

- batch_size: 32
- initial_replay_size: 50000
- lr: 0.0001
- max_replay_size: 1000000
- n_approximators: 10
- n_steps_per_fit: 4
- network: DQNNetwork
- target_update_frequency: 2500
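
With `n_approximators: 10`, Averaged-DQN computes its bootstrap target from the mean of the Q-value estimates of the 10 most recently stored target networks. This lowers the variance of the target. Below is a minimal NumPy sketch of that target. It assumes a discount factor of 0.99, which the table does not list, and `averaged_dqn_target` is a hypothetical helper.

```python
import numpy as np


def averaged_dqn_target(reward, next_q_per_target, absorbing, gamma=0.99):
    """Averaged-DQN bootstrap target (gamma = 0.99 is an assumption).

    next_q_per_target: (K, batch, n_actions) Q-values of s' from the K most
    recent target networks (K = n_approximators = 10 in the table).
    """
    avg_q = next_q_per_target.mean(axis=0)   # average over the K estimates
    max_q = avg_q.max(axis=1)                # greedy value of s'
    return reward + gamma * (1.0 - absorbing) * max_q  # no bootstrap at terminal s'


next_q = np.arange(10)[:, None, None] + np.array([[0, 1, 2], [2, 1, 0]])
print(averaged_dqn_target(np.array([1.0, 0.0]), next_q, np.array([0.0, 1.0])))
```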

CategoricalDQN:

- batch_size: 32
- initial_replay_size: 50000
- lr: 0.0001
- max_replay_size: 1000000
- n_atoms: 51
- n_features: 512
- n_steps_per_fit: 4
- network: DQNFeatureNetwork
- target_update_frequency: 2500
- v_max: 10
- v_min: -10
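
CategoricalDQN (C51) models the return as a distribution over `n_atoms: 51` fixed atoms spaced evenly between `v_min: -10` and `v_max: 10`, which gives a spacing of (10 - (-10)) / 50 = 0.4. The Bellman-shifted distribution r + γz no longer lies on this grid, so it is projected back onto the atoms: each atom's probability is split between its two nearest neighbours. The following NumPy version of that projection is illustrative only. γ = 0.99 and the helper names are assumptions of the sketch.

```python
import numpy as np

V_MIN, V_MAX, N_ATOMS = -10.0, 10.0, 51  # v_min, v_max, n_atoms from the table


def support(v_min=V_MIN, v_max=V_MAX, n_atoms=N_ATOMS):
    """Fixed return atoms; spacing is (v_max - v_min) / (n_atoms - 1) = 0.4."""
    return np.linspace(v_min, v_max, n_atoms)


def project_distribution(next_probs, reward, absorbing, gamma=0.99,
                         v_min=V_MIN, v_max=V_MAX):
    """Project the distribution of r + gamma * z back onto the fixed support.

    next_probs: (batch, n_atoms) atom probabilities for the greedy next action.
    """
    batch, n_atoms = next_probs.shape
    z = support(v_min, v_max, n_atoms)
    delta_z = (v_max - v_min) / (n_atoms - 1)
    # Bellman-shifted atoms, clipped to the support range
    tz = reward[:, None] + gamma * (1.0 - absorbing[:, None]) * z[None, :]
    tz = np.clip(tz, v_min, v_max)
    # Fractional index of each shifted atom on the original grid
    b = np.clip((tz - v_min) / delta_z, 0, n_atoms - 1)
    lower = np.floor(b).astype(int)
    upper = np.ceil(b).astype(int)
    projected = np.zeros_like(next_probs, dtype=float)
    for i in range(batch):
        for j in range(n_atoms):
            p, l, u = next_probs[i, j], lower[i, j], upper[i, j]
            if l == u:  # shifted atom lands exactly on a grid atom
                projected[i, l] += p
            else:  # split mass linearly between the two neighbouring atoms
                projected[i, l] += p * (u - b[i, j])
                projected[i, u] += p * (b[i, j] - l)
    return projected
```

The linear split preserves both total probability and the mean of the shifted distribution whenever no clipping occurs.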

DQN:

- batch_size: 32
- initial_replay_size: 50000
- lr: 0.0001
- max_replay_size: 1000000
- n_steps_per_fit: 4
- network: DQNNetwork
- target_update_frequency: 2500
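
Most of the DQN settings describe a training schedule rather than the network. The sketch below counts gradient steps and target-network syncs over a given number of environment steps. Its assumptions belong to this example, not to the benchmark: a fit happens every `n_steps_per_fit` steps once the replay buffer holds `initial_replay_size` transitions, and `target_update_frequency` is counted in fits. Under that reading, the target network is refreshed every 2500 × 4 = 10,000 environment steps. `count_updates` is a hypothetical helper.

```python
def count_updates(env_steps, initial_replay_size=50_000, max_replay_size=1_000_000,
                  n_steps_per_fit=4, target_update_frequency=2_500):
    """Count fits and target syncs over env_steps steps, starting from an empty buffer.

    Assumption: target_update_frequency counts fits, not environment steps.
    """
    buffer_size = n_fits = n_target_updates = 0
    for step in range(1, env_steps + 1):
        # One transition stored per step; the oldest are dropped once the buffer is full
        buffer_size = min(buffer_size + 1, max_replay_size)
        if step % n_steps_per_fit != 0 or buffer_size < initial_replay_size:
            continue  # not a fit step, or the buffer is still warming up
        n_fits += 1  # one gradient step on a minibatch of batch_size (32) transitions
        if n_fits % target_update_frequency == 0:
            n_target_updates += 1  # copy the online weights into the target network
    return n_fits, n_target_updates


print(count_updates(250_000))
```

For a single 250,000-step epoch that starts from an empty buffer, this gives 50,001 fits and 20 target updates.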

DoubleDQN:

- batch_size: 32
- initial_replay_size: 50000
- lr: 0.0001
- max_replay_size: 1000000
- n_steps_per_fit: 4
- network: DQNNetwork
- target_update_frequency: 2500
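
DoubleDQN uses the same hyperparameters as DQN and changes only the bootstrap target. The online network selects the greedy next action and the target network evaluates it, which reduces the overestimation caused by taking a plain max. The NumPy sketch below shows both targets side by side. γ = 0.99 is assumed, and the helper names are hypothetical.

```python
import numpy as np


def dqn_target(reward, q_next_target, absorbing, gamma=0.99):
    # Target network both selects and evaluates the next action
    return reward + gamma * (1.0 - absorbing) * q_next_target.max(axis=1)


def double_dqn_target(reward, q_next_online, q_next_target, absorbing, gamma=0.99):
    # Online network selects the action ...
    greedy = q_next_online.argmax(axis=1)
    # ... target network evaluates it
    q_eval = q_next_target[np.arange(len(greedy)), greedy]
    return reward + gamma * (1.0 - absorbing) * q_eval
```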

DuelingDQN:

- batch_size: 32
- initial_replay_size: 50000
- lr: 0.0001
- max_replay_size: 1000000
- n_features: 512
- n_steps_per_fit: 4
- network: DQNFeatureNetwork
- target_update_frequency: 2500
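
DuelingDQN splits the head on top of the `n_features: 512` feature vector into two streams: a state value V(s) and an advantage A(s, a). It recombines them as Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a'). Below is a NumPy sketch of that aggregation. The weight names and the 4-action output (the size of Breakout's action set) are assumptions of this example and are not taken from `DQNFeatureNetwork`.

```python
import numpy as np

N_FEATURES = 512  # n_features from the table
N_ACTIONS = 4     # assumption: Breakout's action set (NOOP, FIRE, RIGHT, LEFT)


def dueling_head(features, w_v, b_v, w_a, b_a):
    """Combine value and advantage streams into Q-values (hypothetical weights)."""
    value = features @ w_v + b_v          # (batch, 1) state value V(s)
    advantage = features @ w_a + b_a      # (batch, n_actions) advantages A(s, a)
    # Subtracting the mean advantage makes V and A identifiable
    return value + advantage - advantage.mean(axis=1, keepdims=True)
```

Subtracting the mean advantage means the per-state average of Q equals V(s).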

MaxminDQN:

- batch_size: 32
- initial_replay_size: 50000
- lr: 0.0001
- max_replay_size: 1000000
- n_approximators: 3
- n_steps_per_fit: 4
- network: DQNNetwork
- target_update_frequency: 2500
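
MaxminDQN keeps `n_approximators: 3` independent Q-networks and bootstraps from their element-wise minimum: y = r + γ max over a' of min over k of Q_k(s', a'). The number of networks therefore acts as a knob on the estimation bias. Below is an illustrative target computation. γ = 0.99 is assumed, and the helper name is hypothetical.

```python
import numpy as np


def maxmin_target(reward, next_q_per_net, absorbing, gamma=0.99):
    """Maxmin bootstrap target (gamma = 0.99 is an assumption).

    next_q_per_net: (K, batch, n_actions) Q-values of s' from the K networks
    (K = n_approximators = 3 in the table).
    """
    q_min = next_q_per_net.min(axis=0)  # pessimistic estimate per action
    return reward + gamma * (1.0 - absorbing) * q_min.max(axis=1)
```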

NoisyDQN:

- batch_size: 32
- initial_replay_size: 50000
- lr: 0.0001
- max_replay_size: 1000000
- n_features: 512
- n_steps_per_fit: 4
- network: DQNFeatureNetwork
- target_update_frequency: 2500
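
NoisyDQN drives exploration through learned parameter noise rather than relying only on ε-greedy action selection. The sketch below shows a factorized-Gaussian noisy linear layer of the kind described for NoisyNets. It assumes the layer maps the 512 features to Breakout's 4 actions, with σ₀ = 0.5 and the factorized initialization from the NoisyNet paper. The `NoisyLinear` class was written for this example and is not taken from the benchmark.

```python
import numpy as np


class NoisyLinear:
    """Factorized-Gaussian noisy linear layer (illustrative NumPy sketch)."""

    def __init__(self, in_features, out_features, sigma_0=0.5, rng=None):
        self.rng = rng if rng is not None else np.random.default_rng()
        bound = 1.0 / np.sqrt(in_features)
        # Learnable means, initialized uniformly in [-1/sqrt(p), 1/sqrt(p)]
        self.mu_w = self.rng.uniform(-bound, bound, size=(out_features, in_features))
        self.mu_b = self.rng.uniform(-bound, bound, size=out_features)
        # Learnable noise scales, initialized to sigma_0 / sqrt(p)
        self.sigma_w = np.full((out_features, in_features), sigma_0 * bound)
        self.sigma_b = np.full(out_features, sigma_0 * bound)

    @staticmethod
    def _f(x):
        return np.sign(x) * np.sqrt(np.abs(x))

    def __call__(self, x, noisy=True):
        if not noisy:  # deterministic forward pass with the mean weights
            return x @ self.mu_w.T + self.mu_b
        # One noise vector per input and per output unit (factorized noise)
        eps_in = self._f(self.rng.standard_normal(self.mu_w.shape[1]))
        eps_out = self._f(self.rng.standard_normal(self.mu_w.shape[0]))
        w = self.mu_w + self.sigma_w * np.outer(eps_out, eps_in)
        b = self.mu_b + self.sigma_b * eps_out
        return x @ w.T + b
```

Factorized noise needs only p + q Gaussian samples per forward pass, instead of p × q for independent noise on every weight.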

PrioritizedDQN:

- batch_size: 32
- initial_replay_size: 50000
- lr: 0.0001
- max_replay_size: 1000000
- n_steps_per_fit: 4
- network: DQNNetwork
- target_update_frequency: 2500
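
PrioritizedDQN samples replay transitions in proportion to their TD error rather than uniformly, and corrects the resulting bias with importance-sampling weights. The table lists no prioritization exponents, so the sketch below assumes the proportional variant with α = 0.6 and β = 0.4. `sample_prioritized` is a hypothetical helper that normalizes over all priorities in a linear pass. Practical buffers of size `max_replay_size: 1000000` typically use a sum tree instead.

```python
import numpy as np


def sample_prioritized(td_errors, batch_size=32, alpha=0.6, beta=0.4, eps=1e-6, rng=None):
    """Proportional prioritized sampling (alpha and beta are assumed values)."""
    rng = rng if rng is not None else np.random.default_rng()
    # Priority p_i = (|delta_i| + eps)^alpha; eps keeps zero-error samples reachable
    priorities = (np.abs(td_errors) + eps) ** alpha
    probs = priorities / priorities.sum()
    idx = rng.choice(len(td_errors), size=batch_size, p=probs)
    # Importance-sampling weights, normalized by the largest weight in the batch
    weights = (len(td_errors) * probs[idx]) ** (-beta)
    weights /= weights.max()
    return idx, weights
```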